What happens when the tools you built to help you start making decisions on their own? That's not science fiction anymore; it's the new identity challenge enterprises face with autonomous AI agents. The identity crisis for non-human identities, such as those of MCP servers and AI agents, is rapidly becoming a significant issue that enterprises are struggling to address, pointing to the critical need to secure these identities.

Organizations need to establish Identity and Access Management (IAM) as the foundational security framework for their AI systems as they adopt autonomous agents. This article defines non-human identities, explains why AI agents need identity-based security, and shows how modern IAM systems protect autonomous access to MCP servers, tools, and enterprise systems.

Why Secure AI Agents for Enterprises, and Why Do It Now?

AI agents have changed how businesses operate. Companies now deploy autonomous systems that perform tasks, learn from experience, and make decisions without human intervention. This is a major shift: machines now process data and act on their own analysis of current conditions.

Businesses across sectors use AI agents for routine operations to optimize processes, reduce costs, and improve decision-making. As adoption matures, these systems will manage digital operations at large scale.

From Human Users to Autonomous AI Agents

AI agents act as autonomous operators rather than passive tools. They research, analyze data, browse information, and make decisions based on what they find. Through API connections and enterprise system integrations, agents carry out tasks across applications, trigger business processes, and solve complex problems based on both their environment and their goals.

These systems are transformative because they can:

- Interact with enterprise tools and data systems automatically, without direct human control.

- Use contextual awareness to make instant decisions and act on them immediately.

- Access APIs, documentation, and external systems to complete work independently.

This autonomy cuts both ways: it enables creative work, but it also demands stronger monitoring and governance.

Why AI Agents Are Not Just "Another Service Account"

AI agents differ from service accounts because they adjust their actions based on feedback from their environment and the current situation. The underlying models take in new information, detect patterns, and generate different responses for different situations. That makes these systems more powerful, and more dangerous when security measures are not in place.

Compared with service accounts, AI agents:

- Operate through dynamic decision-making because agents learn new information while adapting to changing situations.

- Display contextual intelligence because they understand company goals and apply that understanding to guide their activities.

- Present a larger attack surface because their wide network access and automated operations increase the potential damage from a breach.

Organizations need to develop protective frameworks that secure these self-operating entities while they continue to learn and operate independently.

What are Non-Human Identities (NHI)?

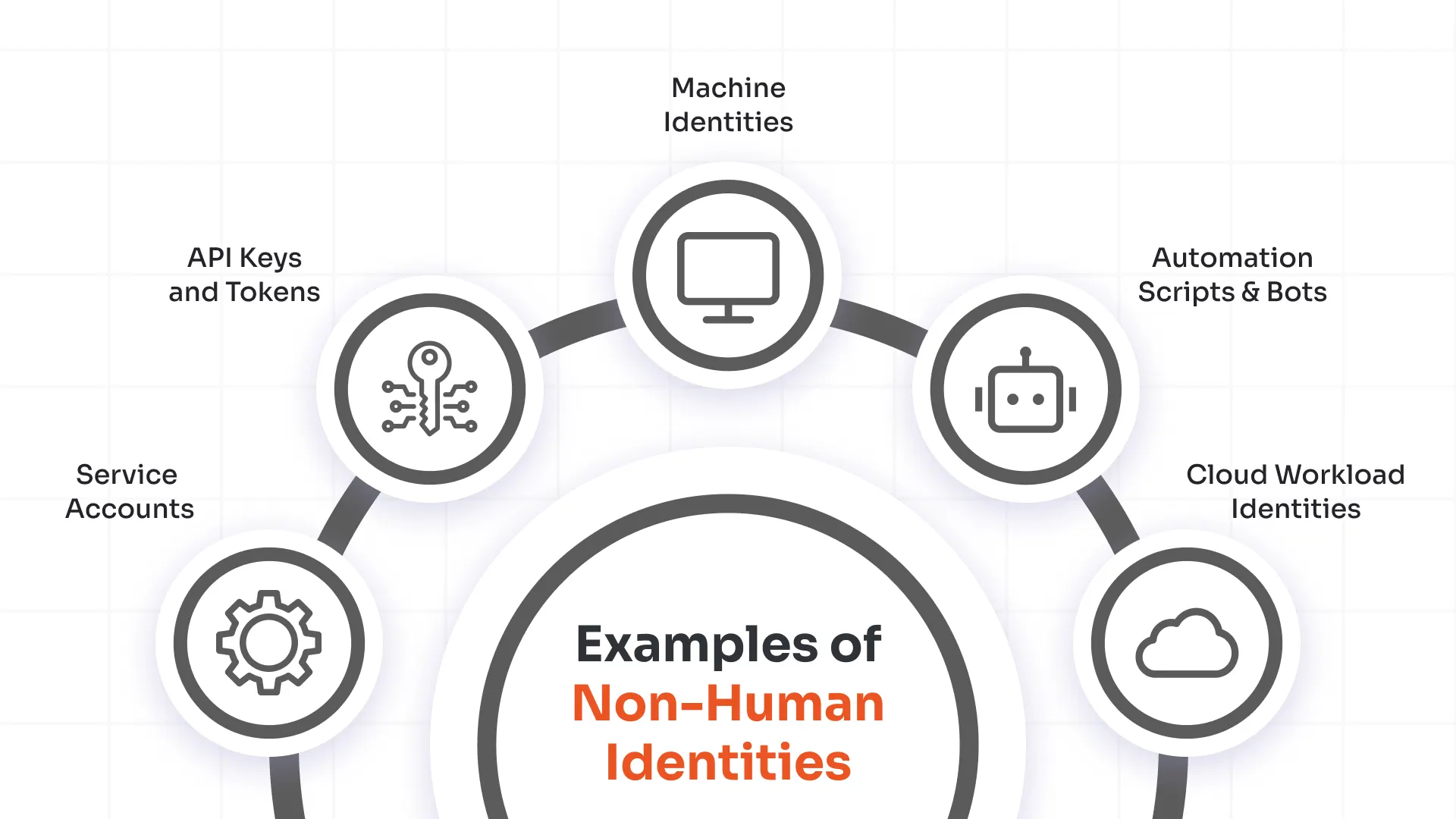

Non-Human Identities (NHIs) are digital entities, such as bots, services, workloads, and AI agents, that interact with enterprise systems through automated processes without human participation. Organizations that automate workflows and adopt cloud-native tools rely on NHIs to run their systems and operations. These identities must be managed to guard against credential abuse, in which an attacker gains unauthorized elevated privileges or access to protected data.

Defining Non-Human Identities in Modern IAM

In Identity and Access Management (IAM), NHIs are machine accounts that execute system and application operations. Examples include:

- Bots, which run repetitive automated tasks and handle functions such as customer query responses.

- Workloads and services, which communicate securely across cloud and hybrid environments.

- AI agents, which access APIs to analyze data, make decisions, and execute actions autonomously.

- Microservices, MCP servers, and backend tools, which authenticate using service credentials to perform specific operational tasks.

NHIs need the same authentication, authorization, and lifecycle management as human users, but automated and at scale. Without adequate governance, vulnerabilities in these identities go undetected.

How AI Agents Fit into the NHI Landscape

AI agents change how IAM has to operate. They are not human users, and they are not just API keys. Each AI agent should be treated as a policy-enforced identity with controlled access, specific permissions, and defined trust limits. This approach makes every AI operation accountable because it brings agents under compliance and audit functions.

The miniOrange IAM platform provides modern access control for both human and non-human identities. It enforces the principle of least privilege, monitors automated systems, and establishes secure links between human operators, system processes, and equipment.

Understanding MCP and AI-to-Tool Communication

MCP enables AI agents to take actions, not just generate responses, which is a significant step in integrating AI with operational systems.

What Is the Model Context Protocol (MCP)?

MCP is a standard protocol that lets AI models interact with tools and access data through common interfaces. Instead of exchanging isolated, one-off responses, the components keep up structured, real-time communication. Through MCP, AI agents can reach APIs, databases, and enterprise tools directly and execute real-time operations such as data retrieval, workflow activation, and security control enforcement.

Why Does MCP Change the Security Model?

MCP turns tools into active execution platforms. Because they execute AI-generated instructions, these tools become active parts of the security perimeter that need protection.

MCP gives AI agents operational control. Agents can initiate tasks themselves, which gives them broader system permissions and decision authority.

MCP depends on identity as its core authorization mechanism. AI agents need authorization, either delegated by a user or granted through role-based access control (RBAC), to perform their assigned tasks. The new trust boundary is defined by identity, not by the model.

Industry reference:

Guidance such as the WorkOS material on MCP security and authentication shows that modern AI systems need identity authentication and permission controls to operate safely.

New Security Risks Introduced by AI Agents and MCP

Enterprises are improving their workflows by deploying AI agents together with the Model Context Protocol (MCP), but these systems introduce security vulnerabilities that organizations often fail to detect. Agents act independently across systems, which makes them powerful and, without adequate oversight, dangerous. Three main security threats need to be evaluated.

Prompt Injection and Instruction Manipulation

Attackers can deceive AI agents with malicious instructions hidden inside prompts and data. Once the agent reads that content, it may execute unauthorized commands or reveal sensitive information. These inputs exploit the trust built into AI workflows to slip past security systems that cannot detect them.

Content manipulation is the entry point: attackers embed hidden commands in user input, documents, and web pages to change how the AI interprets its task.

Identity abuse is where the real damage occurs: the agent acts on the injected instructions and uses its own authorized identity to attack the systems it can reach.

Over-Privileged AI Agents

AI agents often run with broad authorization that lets them do far more than their intended duties. These excess privileges let attackers turn a minor vulnerability into a major breach.

Agents often hold unrestricted access to applications, APIs, and user information.

They depend on static keys and tokens that prolong access and have no proper expiration mechanism.

They do not verify user intent before performing a requested operation, which makes abuse possible.

Lack of Visibility and Auditability

Organizations struggle to identify who initiated an AI-driven operation and exactly when. Existing audit systems were designed for static systems and do not match the behavior of autonomous agents that move between tools and environments. Three questions often go unanswered:

- Who triggered the action? It is hard to distinguish human-initiated operations from AI-initiated ones.

- Which tool was accessed? Activity tracking across platforms is usually incomplete.

- Under whose authority? Without established delegation and accountability, no one can determine who is responsible.

Why Do Traditional Security Models Fail for AI Agents?

Traditional IAM systems were not designed for autonomous, machine-driven environments. They rely on human behavior patterns, interactive logins, MFA prompts, and static credentials, but AI agents run continuously without a human present, which renders these methods ineffective. Organizations that integrate AI into their workflows and systems without adapting IAM expose their business operations to security vulnerabilities.

Static API Keys and Shared Tokens

Most AI integrations still depend on static API keys or shared tokens. These credentials have no dynamic controls, so anyone who obtains one keeps access indefinitely. There is also no way to verify which entity is actually presenting the key. Once attackers obtain a key, they can impersonate an authorized AI agent and move between systems undetected.

No Identity Context

Static credentials carry no proof of the agent's identity, its actions, or its intended purpose. Security systems need context about the source, purpose, and authorization path of a request to enforce fine-grained policies. Without that verification, organizations cannot determine who should have access to what, which leads to data breaches and privilege misuse.

No Revocation

Static API keys typically cannot be revoked in real time. When a key is compromised, teams must rotate credentials manually across multiple systems, usually after the damage is done. AI agents need policy-based access that can be cut off the moment a risk is detected.
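The difference between static keys and revocable, identity-based tokens can be illustrated with a minimal sketch. The `RevocationRegistry` class and `allow_request` function below are hypothetical names used only for illustration; a real deployment would rely on the IAM platform's own revocation or token-introspection mechanism rather than an in-memory store.

```python
import time


class RevocationRegistry:
    """Hypothetical in-memory store of revoked token IDs (illustration only)."""

    def __init__(self):
        self._revoked = {}  # token_id -> time of revocation

    def revoke(self, token_id):
        # Takes effect on the very next access check; no key rotation needed.
        self._revoked[token_id] = time.time()

    def is_revoked(self, token_id):
        return token_id in self._revoked


def allow_request(registry, token_id, expires_at, now=None):
    """Allow a request only if the token is unexpired and not revoked."""
    now = time.time() if now is None else now
    if now >= expires_at:
        return False
    return not registry.is_revoked(token_id)
```

Because every request is checked against the registry, revoking one agent's token blocks that agent immediately without touching credentials used by other systems.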

No Audit Trail

Static tokens make it impossible to trace operations, because they do not reveal who or what performed a given action. Logs record that a token was used but not which system or user was behind each operation. Such logs cannot establish accountability, which makes compliance auditing extremely difficult once AI systems are involved.

IAM Designed Only for Humans

Traditional IAM systems assume a human is present. They require people to enter login credentials, complete MFA steps, and perform other interactive tasks. AI agents, by contrast, authenticate automatically and run without human supervision.

- MFA dependence: MFA establishes trust in a user, but AI agents cannot complete interactive authentication challenges. This gap pushes developers to turn MFA off entirely, which weakens their systems' protection.

- Interactive logins: traditional IAM expects active sessions and cookie-based authentication, which do not work for self-operating toolchains.

- Poor fit for autonomous systems: AI agents require continuous, context-aware access, not temporary, session-based logins. Human-centric IAM cannot establish trust automatically for large volumes of automated API requests.

How IAM Secures AI Agents and Non-Human Identities

Enterprise operations now depend on AI agents, which support decision-making and automate processes. Weak identity management leaves these agents exposed to attack. IAM protects AI agents and other non-human identities by establishing trust through verified identity, enforcing least-privilege access, and monitoring every system interaction.

Identity-Based Access for AI Agents

IAM gives each AI agent its own distinct digital identity, which eliminates generic credentials and shared keys. Organizations can cleanly separate user identities from agent identities and so distinguish human actions from automated decisions. This simplifies accountability and limits the damage when a credential is stolen.

Least-Privilege Authorization for Tools and MCP Servers

IAM enforces least-privilege access through scope-based and role-based permissions. Agents get access to the data and tools they need and nothing more. Policies can define dynamic permission rules that adjust automatically as context and risk levels change. Policy-based enforcement keeps access controlled and auditable across environments.
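As a rough illustration of scope-based least privilege, the sketch below checks an agent's granted scopes before a tool call is allowed. The tool names, scope strings, and the `AgentIdentity` type are assumptions made up for this example; they do not come from any particular IAM product or from the MCP specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: frozenset  # scopes granted to this agent by IAM policy


# Hypothetical mapping from tool names to the scope each one requires.
REQUIRED_SCOPE = {
    "crm.read_contact": "crm:read",
    "crm.update_contact": "crm:write",
    "billing.issue_refund": "billing:refund",
}


def is_allowed(agent, tool):
    """Deny by default: unknown tools and missing scopes are both rejected."""
    required = REQUIRED_SCOPE.get(tool)
    return required is not None and required in agent.scopes
```

An agent granted only `crm:read` can call `crm.read_contact` but is refused `crm.update_contact` and any tool that is not listed at all, which keeps a compromised agent confined to its intended job.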

Token Lifecycle Management

IAM issues short-lived, context-aware access tokens to AI agents. Validating a token confirms the agent's identity, its operating environment, and the purpose the token was issued for. Automatic expiration and straightforward revocation simplify access management and limit the impact of credential theft.
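The following sketch shows the general idea of a short-lived token bound to one agent and one target, using only the Python standard library. It is a simplified stand-in for standard formats such as JWT, not an implementation of them, and the signing key, claim names, and five-minute default lifetime are assumptions chosen for illustration.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-signing-key"  # assumption: in practice this lives in a key vault


def _sign(payload: bytes) -> str:
    digest = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode()


def issue_token(agent_id, audience, ttl_seconds=300, now=None):
    """Issue a signed token bound to one agent and one target tool."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "aud": audience, "exp": now + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims).encode())
    return payload.decode() + "." + _sign(payload)


def validate_token(token, expected_audience, now=None):
    """Return the claims if signature, audience, and expiry all check out."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.split(".")
    except ValueError:
        return None  # malformed token
    if not hmac.compare_digest(signature, _sign(payload.encode())):
        return None  # tampered or signed with a different key
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if claims["aud"] != expected_audience or now >= claims["exp"]:
        return None  # wrong target or expired
    return claims
```

A token issued for one MCP server is rejected by another, and it stops working on its own once the lifetime elapses, so a stolen token has limited reach and a limited useful life.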

Audit Logs and Observability

Trust depends on complete operational visibility. IAM records all agent activity, including authentication requests and API calls, in detailed audit logs. Security teams use these compliance-grade logs to spot unusual activity and to verify agent actions for regulatory reporting.
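One way to make agent activity attributable is to emit a structured record for every access decision. The sketch below is a minimal example; the field names (`agent_id`, `on_behalf_of`, and so on) are assumptions for illustration and not a schema defined by any specific IAM platform.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id, on_behalf_of, tool, action, decision, timestamp=None):
    """Build one structured audit entry as a JSON line."""
    timestamp = timestamp or datetime.now(timezone.utc)
    entry = {
        "timestamp": timestamp.isoformat(),
        "agent_id": agent_id,          # which non-human identity acted
        "on_behalf_of": on_behalf_of,  # delegating user, or None
        "tool": tool,                  # which tool or MCP server was accessed
        "action": action,
        "decision": decision,          # "allow" or "deny"
    }
    return json.dumps(entry, sort_keys=True)
```

Each line records who acted, which tool was touched, and under whose authority, so the log can be searched and correlated across tools.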

How miniOrange IAM Enables Secure AI and MCP Architectures

AI-driven ecosystems make decisions quickly and automatically, and that speed expands the attack surface. miniOrange IAM acts as a single security layer that protects human-to-AI, AI-to-MCP, and MCP-to-enterprise interactions. With identity-based access control, organizations can create policy-based security zones that meet governance needs without slowing innovation.

Unified IAM for Human and Non-Human Identities

miniOrange IAM manages identities for both human users and machines, which removes silos across AI pipelines.

AI agents authenticate to enterprise services through identity-based access instead of static keys.

MCP servers and the environments that host AI models operate under IAM policy control, so access is fully monitored and audited.

Integration with existing IAM tools enables secure access and single sign-on across cloud and on-premises workloads.

Secure Machine-to-Machine Authentication

Machine-to-machine communication in AI ecosystems needs stronger protection than API keys can provide. miniOrange IAM implements secure, standards-based authentication.

AI components interact with each other using authenticated access tokens obtained through OAuth-based access.

Scoped tokens replace API keys for access control. A scoped token permits only particular operations on a restricted set of data, which reduces exposure and blocks unauthorized access.
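For machine-to-machine access, OAuth 2.0 defines the client credentials grant (RFC 6749, Section 4.4). The text above only says "OAuth-based access", so treating it as this particular grant is an assumption. The sketch below builds the form-encoded body an agent would POST to an authorization server's token endpoint; the client ID, secret, and scope names are hypothetical, and many servers prefer the client credentials to be sent via HTTP Basic authentication instead of in the body.

```python
from urllib.parse import urlencode


def build_token_request(client_id, client_secret, scopes):
    """Return the form-encoded body for a client credentials token request."""
    return urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": " ".join(scopes),  # space-delimited list, per RFC 6749
    })
```

Requesting only the scopes a task needs, for example `["crm:read"]`, means the resulting access token cannot be used for anything broader.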

Policy-Driven Access Control for AI Tools

AI-powered workflows need flexible access to data and services together with proper controls. miniOrange IAM uses adaptive policies to determine access permissions.

Permissions are granted dynamically based on roles, attributes, and contextual risk assessment.

Zero Trust is enforced through ongoing identity and device verification: access is granted only after successful verification, which removes implicit trust.

Governance is centralized, so teams can track all the AI and MCP systems they control, enforce policies, and monitor compliance.
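A policy decision that combines roles, attributes, and contextual risk might look like the sketch below. This is a generic illustration and not miniOrange's policy engine; the role names, the `environment` attribute, the risk threshold, and the `"step_up"` outcome are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    role: str
    environment: str   # attribute, e.g. "prod" or "staging"
    action: str
    risk_score: float  # 0.0 (low) to 1.0 (high), from a hypothetical risk engine


# Hypothetical policy table: actions each role may perform.
ROLE_ACTIONS = {
    "reporting-agent": {"read"},
    "ops-agent": {"read", "restart_service"},
}


def evaluate(request, risk_threshold=0.7):
    """Return "allow", "deny", or "step_up" for one request."""
    if request.action not in ROLE_ACTIONS.get(request.role, set()):
        return "deny"      # role check: action not permitted for this role
    if request.risk_score >= risk_threshold:
        return "deny"      # contextual risk check
    if request.environment == "prod" and request.action != "read":
        return "step_up"   # attribute check: needs extra approval
    return "allow"
```

Every request is evaluated on its own, so a rise in the risk score or a change of environment changes the outcome without anyone editing the agent's credentials.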

IAM Best Practices for AI Agents and MCP Servers

AI agents integrate into enterprise workflows, and those connections create specific risks for identity and access management. Treat every AI system connection as a potential security risk that widens your attack surface. When working with AI agents and MCP servers, follow these core IAM best practices.

1. Treat AI Agents as Untrusted by Default

Always apply a Zero Trust mindset. AI agents are not automatically secure just because they come from established vendors or platform providers. Isolate agents with access restrictions that limit their privileges to basic functions and block access to data modification features. Establish strict policies that define what agents can do, and always verify the actions they execute.

2. Never Share User Credentials with Agents

Never pass user credentials directly to AI or MCP systems. Use delegated access through standard protocols such as OAuth 2.0 and OpenID Connect. With delegation, a stolen token or exposed password does not grant full account access, and IAM can determine where each request originated.

3. Use Identity, Not API Keys

Stop using static API keys for authentication. Authenticate with short-lived tokens tied to service accounts or machine identities. Identity-driven authentication tells systems who is calling, which makes it easier to define fine-grained access restrictions and track activity.

4. Audit and Rotate Agent Credentials Regularly

Because agents run independently, their credentials can stay active well beyond their intended lifetime. Monitor credential usage, remove inactive tokens, and rotate agent secrets and certificates on a schedule. Integrate these checks into your CI/CD pipelines and configuration management tools for continuous security monitoring.
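A scheduled credential review of this kind can be sketched as a small function that could run in a CI/CD job. The 30-day rotation age, 14-day idle limit, and the credential record fields are assumptions chosen for illustration; actual thresholds depend on the organization's policy.

```python
from datetime import datetime, timedelta


def review_credentials(credentials, now, max_age_days=30, max_idle_days=14):
    """Split credentials into those to revoke (idle) and those to rotate (old).

    Each credential is a dict with "id", "created_at", and "last_used_at".
    """
    to_revoke, to_rotate = [], []
    for cred in credentials:
        if now - cred["last_used_at"] > timedelta(days=max_idle_days):
            to_revoke.append(cred["id"])   # unused: remove rather than rotate
        elif now - cred["created_at"] > timedelta(days=max_age_days):
            to_rotate.append(cred["id"])   # in use but past rotation age
    return to_revoke, to_rotate
```

Idle credentials are revoked outright instead of rotated, since an unused secret offers no benefit and only adds exposure.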

Maximize the Potential of Future AI Agents and Identities

Organizations are adopting AI-first operating models in which intelligent agents run business operations. IAM is the foundational security layer that makes this safe by combining Zero Trust principles with agent management.

Identity as the Control Plane for AI

Identity serves as the control plane for AI: a central system that governs AI workflow operations, API requests, and model execution commands. Teams use runtime authorization, which requires agents to obtain specific, time-bound permissions for each task. This setup covers the full credential life cycle, from creation to final removal.

From Zero Trust for Users to Zero Trust for Agents

Organizations are extending Zero Trust security from user accounts to AI agents because Gartner projects that AI agents will perform 30% of enterprise work without human supervision by 2026. Agents require continuous verification, dynamic identities via Decentralized Identifiers (DIDs), and behavioral monitoring to counter risks such as credential theft and fast-spreading damage. The NIST AI RMF helps organizations implement IAM controls that protect non-human entities and reduce their attack surface.

Why IAM Defines Secure AI Adoption

Strong IAM enables fast, safe use of AI by using AI-driven detection to spot threats automatically and by granting only the minimum permissions needed. Without it, agents can amplify risk by as much as 100 times compared with human operators, because they operate at high speed with extensive capabilities. Leaders who build on identity principles create organizations that both innovate and stay compliant.

Getting the Basics Right with IAM for Secure AI Adoption

AI is now an operational component that takes an active part in business workflows. Today's AI systems make decisions, retrieve data, and trigger other systems. As they take on roles, privileges, and identities, they function much like human users.

Organizations that adopt the Model Context Protocol (MCP) and similar systems immediately increase their exposure. Without proper identity management, attackers can exploit AI agents, API interactions, and data requests to carry out unauthorized activity. Perimeter-based security fails here because it cannot confirm who originated a request.

Identity and Access Management (IAM) is the scalable way to control this emerging environment. IAM protects both human users and machine systems by assigning and verifying digital identities and enforcing access, so that each identity gets authorized access to the resources it needs at the right time. Within a Zero Trust framework, IAM protects your AI ecosystem with fine-grained Role-Based Access Control (RBAC) and continuous authentication.

miniOrange helps organizations turn authentication into a secure operational foundation. It unifies identity management, uses AI to verify service access, and enforces flexible security rules across all users and devices. With a trusted IAM foundation in place, enterprises can innovate with AI while staying secure and compliant.
